TL;DR:

Generative AI models, such as GANs and VAEs, have shown great potential in applications like image synthesis, natural language processing, and data augmentation. Open-source libraries like TensorFlow, Keras, and PyTorch provide building blocks for implementing and training generative models. Popular open-source generative AI models include DCGAN, VQ-VAE, and StyleGAN. To get started, choose a suitable model for your project, prepare your dataset, train and tune the model, and evaluate its performance using appropriate metrics. Once satisfied, you can deploy your generative AI model in real-world applications.

Introduction

Generative AI is a rapidly evolving field that focuses on developing algorithms capable of creating new data samples that resemble the data they were trained on. These algorithms have shown great potential in various applications, including image synthesis, natural language processing, data augmentation, and more. OpenAI, a research lab dedicated to creating and promoting friendly AI, has contributed significantly to the development of generative AI models. This article explores various open-source generative AI models and points to tutorials that will help you get started with these cutting-edge technologies.

Understanding Generative AI Models

Generative Adversarial Networks (GANs)

GANs consist of two neural networks, a generator and a discriminator, trained together in a competitive setting. The generator creates new data samples, while the discriminator estimates whether a given sample is real or generated. The generator aims to produce samples indistinguishable from those in the original dataset, while the discriminator tries to tell actual and generated samples apart. Through this adversarial process, both networks improve their performance.
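To make the competition concrete, here is a minimal sketch of the usual adversarial losses in plain Python. It assumes the discriminator outputs a probability that a sample is real; the function names and toy probabilities are illustrative, and real frameworks compute the same binary cross-entropy over tensors. The generator loss shown is the widely used "non-saturating" variant (the generator maximizes log D(G(z))) rather than the original minimax form.

```python
import math

def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Toy discriminator outputs: probabilities that each sample is real.
d_real = [0.9, 0.8]   # discriminator is fairly sure these real samples are real
d_fake = [0.2, 0.3]   # and fairly sure these generated samples are fake
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake))  # large: the generator is not fooling the discriminator yet
```

Training alternates between the two objectives: a discriminator step that lowers discriminator_loss, then a generator step that lowers generator_loss while the discriminator is held fixed.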

Popular GAN architectures include DCGAN (Deep Convolutional GAN), StyleGAN, and CycleGAN. GANs have shown remarkable success in image synthesis, style transfer, and generating realistic human faces.

Variational Autoencoders (VAEs)

VAEs combine probabilistic generative modeling with autoencoders, neural networks trained without labels to reconstruct their input. A VAE learns to encode input data into a distribution over a lower-dimensional latent space and then decode latent samples back into the original data format. During training, the encoder and decoder are optimized jointly to minimize the difference between the input and reconstructed data, while a Kullback-Leibler (KL) divergence term imposes structure on the latent space by keeping the encoded distributions close to a simple prior, typically a standard normal distribution. Once trained, the decoder can generate new data samples from latent vectors drawn from that prior.
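The training objective and sampling step can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a diagonal Gaussian encoder that outputs a mean mu and a log-variance log_var per latent dimension and a standard normal prior; the squared-error reconstruction term is one common choice (it corresponds to a Gaussian decoder up to constants and scaling), and all names are hypothetical. The reparameterize function shows the "reparameterization trick": the random sample is written as a deterministic function of mu and log_var plus independent noise, so gradients can flow through the sampling step.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), independently per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

def vae_loss(x, x_hat, mu, log_var):
    """Reconstruction error plus the KL regularizer on the latent space."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return reconstruction + kl_to_standard_normal(mu, log_var)

rng = random.Random(0)
mu, log_var = [0.5, -0.2], [-1.0, 0.3]               # hypothetical encoder outputs for one input
z = reparameterize(mu, log_var, rng)                  # latent sample passed to the decoder
prior_z = [rng.gauss(0.0, 1.0) for _ in range(2)]     # generation: sample the prior instead
```

After training, generation skips the encoder entirely: draw z from the standard normal prior (as prior_z does) and pass it through the decoder.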

Popular VAE architectures include VQ-VAE (Vector Quantized VAE) and β-VAE. VAEs have been successfully applied in image generation, text generation, and music synthesis.

Open Source Generative AI Models

TensorFlow and Keras

TensorFlow is an open-source machine learning library developed by Google, and Keras is a high-level neural network API that ships with TensorFlow as tf.keras (Keras 3 can also run on JAX and PyTorch). Both provide building blocks, such as layers, loss functions, optimizers, and training loops, for implementing and training generative models like GANs and VAEs.

To get started with TensorFlow and Keras, you can follow the official tutorials and examples available on their websites.

PyTorch

PyTorch is a popular open-source machine learning library, originally developed by Facebook (now Meta), that provides a flexible platform for building and training generative models. As with TensorFlow, you can find various examples and tutorials on its official website and GitHub repositories.

To get started with PyTorch, you can follow the official PyTorch tutorial on implementing a DCGAN.

DCGAN

DCGAN (Deep Convolutional GAN) is a GAN architecture that uses convolutional layers in both networks: the generator upsamples a random noise vector into an image with transposed convolutions, and the discriminator downsamples images with strided convolutions instead of pooling. It has been widely adopted for image generation due to its simplicity and effectiveness. TensorFlow example code is linked here: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/examples/generative
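One way to see how a DCGAN generator turns a noise vector into an image is to trace the spatial size through its transposed-convolution layers. The sketch below uses the output-size formula that PyTorch documents for ConvTranspose2d, specialized to dilation 1 and no output padding. The layer settings (a 4x4 stride-1 projection followed by four 4x4 stride-2 layers with padding 1, ending at 64x64) are an assumption modeled on common DCGAN configurations, not taken from the linked code.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def generator_sizes(z_size: int = 1) -> list[int]:
    """Trace spatial sizes through an assumed DCGAN-style 64x64 generator."""
    sizes = [z_size]
    # Project the 1x1 latent "image" up to a 4x4 feature map.
    sizes.append(conv_transpose_out(sizes[-1], kernel=4, stride=1, padding=0))
    # Each stride-2 layer with kernel 4 and padding 1 doubles the resolution.
    for _ in range(4):
        sizes.append(conv_transpose_out(sizes[-1], kernel=4, stride=2, padding=1))
    return sizes

print(generator_sizes())  # [1, 4, 8, 16, 32, 64]
```

In common configurations the discriminator mirrors this path with strided convolutions, halving the resolution at each stride-2 layer (64, 32, 16, 8, 4) before a final layer produces a single real/fake score.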

To get started with DCGAN using TensorFlow, you can follow the tutorial available on the TensorFlow website:

TensorFlow DCGAN tutorial: https://www.tensorflow.org/tutorials/generative/dcgan

This tutorial will guide you through implementing a DCGAN using TensorFlow, training the model on the MNIST dataset, and generating new images of handwritten digits.

VQ-VAE

Vector Quantized Variational Autoencoder (VQ-VAE) is a generative model introduced by researchers at DeepMind (van den Oord et al., 2017) that uses discrete latent representations: each encoder output is mapped to the nearest entry in a learned codebook. Discrete codes avoid the "posterior collapse" that can affect VAEs with powerful decoders, which allows for better control over the generated output and improved performance in some applications. An implementation can be found here: https://github.com/openai/vq-vae

To get started with VQ-VAE using PyTorch, you can follow the tutorial available on OpenAI's GitHub repository:

OpenAI VQ-VAE tutorial: https://github.com/openai/vq-vae/blob/master/vq-vae.ipynb

This Jupyter Notebook tutorial will walk you through implementing and training a VQ-VAE using PyTorch and generating new images using the trained model.
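The core of VQ-VAE's discrete latent representation is a nearest-neighbour lookup: each vector produced by the encoder is replaced by the closest entry in a learned codebook, so the decoder only ever sees codebook vectors. The sketch below shows just that lookup in plain Python with a toy codebook. It omits the parts that make training work (a straight-through gradient estimator for the non-differentiable lookup, plus codebook and commitment loss terms), and the names are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(vectors, codebook):
    """Replace each encoder output vector by its nearest codebook entry.

    Returns (codes, quantized): the integer index chosen for each vector and
    the corresponding codebook vectors that the decoder would receive.
    """
    codes = []
    for v in vectors:
        best = min(range(len(codebook)), key=lambda k: squared_distance(v, codebook[k]))
        codes.append(best)
    quantized = [codebook[k] for k in codes]
    return codes, quantized

codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]      # toy "learned" embeddings
encoder_out = [[0.9, 1.2], [-0.8, 0.4], [0.1, -0.1]]  # toy encoder outputs
codes, quantized = quantize(encoder_out, codebook)
print(codes)  # [1, 2, 0]
```

Because each encoder output collapses to an integer index, an image can be stored as a small grid of codes, and a separate prior model over those codes can be trained and sampled to generate new images.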

StyleGAN

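StyleGAN is a GAN architecture developed by NVIDIA for generating high-resolution, high-quality images. Instead of feeding the latent code directly into the generator, it uses a mapping network to produce an intermediate latent vector that controls the image "style" at each resolution. StyleGAN2 is an improved version of the original StyleGAN. The official implementation can be found here: https://github.com/NVlabs/stylegan2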

To get started with StyleGAN, you can follow the tutorial available on NVIDIA's GitHub repository:

StyleGAN tutorial: https://github.com/NVlabs/stylegan2/blob/master/README.md

The tutorial provides an overview of the StyleGAN architecture, installation instructions, and guidance on training and generating images using the pre-trained models.
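When generating images from a pre-trained StyleGAN model, a common knob is the "truncation trick" described in the StyleGAN papers: each intermediate latent w is pulled toward the average latent w_avg by a factor psi, trading sample diversity for more typical, higher-quality images. The sketch below is plain Python; toy_mapping is a hypothetical stand-in for the learned mapping network, and w_avg is estimated here by sampling, whereas the real model tracks it during training.

```python
import random

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def truncate(w, w_avg, psi):
    """Truncation trick: move w toward w_avg.

    psi = 1 leaves w unchanged; psi = 0 collapses every sample to w_avg.
    """
    return [a + psi * (x - a) for x, a in zip(w, w_avg)]

def toy_mapping(z):
    """Hypothetical stand-in for StyleGAN's learned mapping network z -> w."""
    return [2.0 * v + 1.0 for v in z]

rng = random.Random(0)
samples = [toy_mapping([rng.gauss(0.0, 1.0) for _ in range(4)]) for _ in range(1000)]
w_avg = mean_vector(samples)             # estimate of the average intermediate latent
w = samples[0]
w_trunc = truncate(w, w_avg, psi=0.7)    # this would be fed to the synthesis network
```

Smaller psi values give safer but less varied images; psi close to 1 keeps the full diversity of the model, including its less convincing samples.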

Getting Started with Open-Source Generative AI Models

Selecting a suitable generative AI model for your project

Choosing the appropriate generative AI model depends on your specific use case, the type of data you're working with, and the level of control and quality you need in the generated output. GANs are generally better suited for generating sharp, high-quality images, while VAEs may be more appropriate when you need a structured latent space (for example, to interpolate between samples or control specific attributes) or more stable training, at the cost of samples that are often blurrier.

Preparing your dataset for training

Before training your generative AI model, you'll need to prepare your dataset. This typically involves data cleaning, normalization, and splitting the data into training and validation sets. For image datasets, you may also need to resize or crop the images to a consistent size.
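As a minimal sketch of two of these steps, assuming 8-bit grayscale images stored as nested lists: the code below scales pixels to [-1, 1], the range produced by a tanh output layer such as the one used in DCGAN generators, and makes a reproducible shuffled train/validation split. The toy images and function names are illustrative; resizing and cropping would normally be done with an image library and are omitted here.

```python
import random

def normalize_pixels(image):
    """Scale 8-bit pixel values from [0, 255] to [-1, 1]."""
    return [[p / 127.5 - 1.0 for p in row] for row in image]

def train_val_split(items, val_fraction=0.1, seed=42):
    """Shuffle a copy of the data and split it into training and validation sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

# Toy 2x2 grayscale "images" standing in for a real dataset.
dataset = [[[0, 255], [128, 64]] for _ in range(10)]
normalized = [normalize_pixels(img) for img in dataset]
train, val = train_val_split(normalized, val_fraction=0.2)
print(len(train), len(val))  # 8 2
```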

Fine-tuning and optimizing your model

Once your dataset is prepared, you must train your generative AI model. This involves selecting the appropriate hyperparameters, such as learning rate, batch size, and the number of training epochs. You may need to experiment with different settings to find the optimal configuration for your specific task.
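A simple way to run this experimentation is a grid search: train once per combination of candidate values and keep the configuration with the best validation score. In the sketch below, fake_train_and_evaluate is a hypothetical stand-in for a real (and expensive) training run that returns a lower-is-better score such as FID. The anchor values (learning rate 0.0002, Adam beta1 = 0.5, batch size 128) are the settings reported in the DCGAN paper, used here only as an example.

```python
import itertools

# Settings reported in the DCGAN paper (Radford et al., 2015), used as an example anchor.
DCGAN_DEFAULTS = {"learning_rate": 2e-4, "beta1": 0.5, "batch_size": 128}

def grid(search_space):
    """Yield every combination of candidate hyperparameter values as a dict."""
    keys = list(search_space)
    for values in itertools.product(*(search_space[k] for k in keys)):
        yield dict(zip(keys, values))

def best_config(search_space, train_and_evaluate):
    """Run every configuration and return (score, config) with the lowest score."""
    results = [(train_and_evaluate(cfg), cfg) for cfg in grid(search_space)]
    return min(results, key=lambda result: result[0])

def fake_train_and_evaluate(cfg):
    """Hypothetical stand-in for a full training run that returns a validation score."""
    lr_penalty = abs(cfg["learning_rate"] - DCGAN_DEFAULTS["learning_rate"]) * 1e4
    batch_penalty = abs(cfg["batch_size"] - DCGAN_DEFAULTS["batch_size"]) / 64
    return lr_penalty + batch_penalty

space = {
    "learning_rate": [1e-4, DCGAN_DEFAULTS["learning_rate"], 5e-4],
    "batch_size": [64, DCGAN_DEFAULTS["batch_size"]],
}
score, cfg = best_config(space, fake_train_and_evaluate)
print(score, cfg)
```

Because each evaluation is a full training run, random search or early stopping of poor configurations is often used instead of an exhaustive grid.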

Evaluating the performance of your generative AI model

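Evaluating the performance of generative AI models can be challenging, as traditional metrics like accuracy may not be applicable: there is no single correct output to compare against. Instead, you can use metrics like the Fréchet Inception Distance (FID), which compares the statistics of Inception-v3 features extracted from real and generated images (lower is better), or the Inception Score (IS), which rewards samples that a pretrained classifier labels confidently and that cover many classes (higher is better).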
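The Inception Score is simple enough to compute by hand once you have class probabilities for each generated sample. The sketch below assumes those probability vectors p(y|x) are already available (in practice they come from a pretrained Inception-v3 classifier, which is not included here) and applies the definition IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y) is the average prediction over all samples. FID needs feature covariances and a matrix square root, so it is usually computed with an established implementation rather than by hand.

```python
import math

def inception_score(class_probs, eps=1e-12):
    """IS = exp( mean over samples of KL( p(y|x) || p(y) ) ).

    class_probs: one probability vector p(y|x) per generated sample.
    """
    n = len(class_probs)
    num_classes = len(class_probs[0])
    # Marginal class distribution p(y): the average prediction over all samples.
    marginal = [sum(p[c] for p in class_probs) / n for c in range(num_classes)]
    total_kl = 0.0
    for p in class_probs:
        # Terms with p(y|x) = 0 contribute nothing to the KL divergence.
        total_kl += sum(pc * math.log(pc / max(mc, eps)) for pc, mc in zip(p, marginal) if pc > 0)
    return math.exp(total_kl / n)

confident_and_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
all_the_same = [[0.2, 0.5, 0.3]] * 3
print(inception_score(confident_and_diverse))  # close to 3.0, the number of classes
print(inception_score(all_the_same))           # approximately 1.0: no diversity
```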

Deploying your generative AI model in real-world applications

Once you're satisfied with the performance of your generative AI model, you can deploy it in a variety of applications, such as image synthesis, data augmentation, or even generating text or music. Depending on your needs, you may deploy the model on a server, in the cloud, or even on edge devices for real-time generation.
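Whatever the hosting target, a served generator usually sits behind a thin request-handling layer that validates input and caps the work done per request. The sketch below shows such a layer in plain Python using JSON; generate is a hypothetical stand-in that returns random latent vectors instead of images, MAX_SAMPLES is an assumed limit, and handle_request could be wrapped by any web framework or serverless handler. Accepting a seed makes outputs reproducible, which helps with debugging and caching.

```python
import json
import random

MAX_SAMPLES = 16  # assumption: cap request size to bound latency and memory

def generate(num_samples, seed, latent_dim=4):
    """Hypothetical stand-in for a trained generator: returns random latent vectors.

    A real service would pass these latents through the generator network and
    encode the outputs (for example as PNG images) before returning them.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(num_samples)]

def handle_request(body: str) -> tuple[int, str]:
    """Validate a JSON request and return (HTTP status code, JSON response body)."""
    try:
        request = json.loads(body)
        num_samples = int(request.get("num_samples", 1))
        seed = int(request.get("seed", 0))
    except (ValueError, TypeError, AttributeError):
        return 400, json.dumps({"error": "malformed request"})
    if not 1 <= num_samples <= MAX_SAMPLES:
        return 400, json.dumps({"error": f"num_samples must be between 1 and {MAX_SAMPLES}"})
    samples = generate(num_samples, seed)
    return 200, json.dumps({"seed": seed, "samples": samples})

status, body = handle_request('{"num_samples": 2, "seed": 7}')
print(status)
```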

Conclusion

The growing field of generative AI has led to the development of many open-source generative AI models, making it easier than ever for developers to implement and experiment with these cutting-edge technologies.

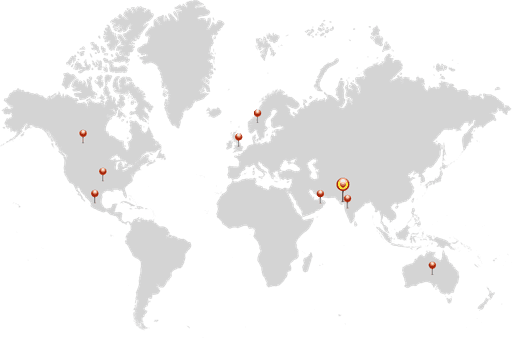

We are a family of Promactians

We are an excellence-driven company passionate about technology where people love what they do.

Get opportunities to co-create, connect and celebrate!

Vadodara

Headquarter

B-301, Monalisa Business Center, Manjalpur, Vadodara, Gujarat, India - 390011

Ahmedabad

West Gate, B-1802, Besides YMCA Club Road, SG Highway, Ahmedabad, Gujarat, India - 380015

Pune

46 Downtown, 805+806, Pashan-Sus Link Road, Near Audi Showroom, Baner, Pune, Maharashtra, India - 411045.

USA

4056, 1207 Delaware Ave, Wilmington, DE, United States America, US, 19806

Copyright ⓒ Promact Infotech Pvt. Ltd. All Rights Reserved

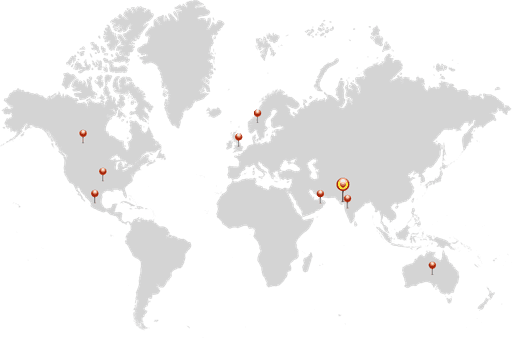

We are a family of Promactians

We are an excellence-driven company passionate about technology where people love what they do.

Get opportunities to co-create, connect and celebrate!

Vadodara

Headquarter

B-301, Monalisa Business Center, Manjalpur, Vadodara, Gujarat, India - 390011

Ahmedabad

West Gate, B-1802, Besides YMCA Club Road, SG Highway, Ahmedabad, Gujarat, India - 380015

Pune

46 Downtown, 805+806, Pashan-Sus Link Road, Near Audi Showroom, Baner, Pune, Maharashtra, India - 411045.

USA

4056, 1207 Delaware Ave, Wilmington, DE, United States America, US, 19806

Copyright ⓒ Promact Infotech Pvt. Ltd. All Rights Reserved